Unlocking WASI: The Future of Serverless with WebAssembly

Discover how WASI is transforming serverless computing with secure, portable WebAssembly runtimes for the cloud era.

Dev Orbit

June 11, 2025

Why WASI Matters

WebAssembly has long been seen as a browser technology. But with the WebAssembly System Interface (WASI), we're now entering a new era where WebAssembly powers secure, lightweight and portable workloads beyond the browser. In this deep dive, we’ll explore how WASI works, why cloud architects and backend engineers are adopting it for serverless applications and how it’s reshaping emerging cloud architectures.

The Serverless Bottleneck — And Why WASI Changes the Game

The rise of serverless computing promised a world without server management, scaling worries or infrastructure headaches. Platforms like AWS Lambda, Google Cloud Functions and Azure Functions allow developers to focus entirely on code.

But there’s a catch:

🔄 Cold starts add latency, especially for infrequently invoked functions.

🔐 Security boundaries between functions and the host OS are complex.

🌐 Language/runtime limitations tie each function to a provider-supported runtime, so teams often end up maintaining several language-specific stacks.

💰 Resource usage overhead can inflate costs for high-frequency workloads.

Enter the WebAssembly System Interface (WASI): a standardized set of system APIs for WebAssembly that, paired with a Wasm runtime, offers near-native performance, portable binaries and a capability-based security model, directly addressing many of these serverless pain points.

The Big Promise:

WASI enables secure, sandboxed and fast-starting serverless workloads that run regardless of the host OS or hardware architecture, written in any language that can compile to WebAssembly.

What Exactly Is WASI? (And Why You Should Care)

WebAssembly (Wasm) was originally designed to run high-performance code inside browsers. Its compact binary format allows code to execute at near-native speeds while maintaining strong sandboxing and security boundaries.

However, Wasm alone isn't enough to build full-fledged applications outside the browser because it lacks system-level access (e.g., files, networking, environment variables).

That’s where WASI comes in.

WASI in Simple Terms:

WASI is a standardized API layer that allows WebAssembly modules to securely interact with system resources like files, sockets, clocks and random number generators — without exposing dangerous OS-level privileges.
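In practice, ordinary Rust standard-library code already goes through WASI when compiled for the wasm32-wasip1 target. The sketch below assumes that target; the APP_MODE variable and the config file name are hypothetical, and the WASI function names in the comments describe how std is typically lowered rather than anything the code calls directly.

```rust
use std::env;
use std::fs;
use std::io;
use std::path::Path;
use std::time::{SystemTime, UNIX_EPOCH};

/// Reads a config file from a directory the host has granted.
/// Under wasm32-wasip1 this typically becomes WASI calls such as
/// path_open and fd_read instead of direct OS syscalls.
pub fn read_config(dir: &Path, name: &str) -> io::Result<String> {
    fs::read_to_string(dir.join(name))
}

/// Looks up a (hypothetical) APP_MODE variable. A WASI guest only sees
/// the variables the host chose to pass in (environ_get).
pub fn app_mode() -> String {
    env::var("APP_MODE").unwrap_or_else(|_| "default".to_string())
}

/// Reads the wall clock; on WASI this goes through clock_time_get.
pub fn unix_seconds() -> u64 {
    SystemTime::now()
        .duration_since(UNIX_EPOCH)
        .map(|d| d.as_secs())
        .unwrap_or(0)
}
```

If a main calls read_config(Path::new("."), "app.toml") and the module is started with wasmtime run --dir=. --env APP_MODE=prod app.wasm, the guest gets exactly one directory and one variable; without --dir, the file read fails because nothing was preopened.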

Think of WASI as a universal adapter:

🎯 Portable: Build once, run anywhere (bare metal, VMs, edge, cloud)

🔒 Secure: Sandboxed by default

🏃 Fast: Near-native execution speeds

🛠️ Polyglot: Supports multiple programming languages

The Core Architecture of WASI

Let’s visualize how WASI works at runtime:

Description: Diagram showing a WebAssembly module running inside a WASI-compliant runtime. The runtime mediates every interaction with the host OS through controlled WASI calls (file I/O, networking, etc.), maintaining strict sandboxing between the Wasm module and the host kernel.

At its core:

WebAssembly Module: Compiled binary (e.g., from Rust, C, AssemblyScript, TinyGo)

WASI Runtime: A Wasm engine that implements the WASI APIs and decides which host resources a module may use (examples: Wasmtime, Wasmer, WasmEdge)

Host OS: Provides actual system resources but only through carefully controlled WASI calls
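Conceptually, the runtime holds a table of capabilities and checks every request against it. Below is a hypothetical, simplified host-side model of WASI's preopened directories (the Preopens type and its methods are invented for illustration and are not any runtime's API): a guest path is resolved only inside a directory that was explicitly granted, and any attempt to climb out is refused.

```rust
use std::collections::HashMap;
use std::path::{Component, Path, PathBuf};

/// Hypothetical host-side table of directories granted to a guest,
/// keyed by the guest-visible name (similar in spirit to a --dir mapping).
#[derive(Default)]
pub struct Preopens {
    dirs: HashMap<String, PathBuf>,
}

impl Preopens {
    pub fn new() -> Self {
        Self::default()
    }

    /// Grants the guest access to host_dir, visible to it as guest_name.
    pub fn grant(&mut self, guest_name: &str, host_dir: &str) {
        self.dirs.insert(guest_name.to_string(), PathBuf::from(host_dir));
    }

    /// Resolves a relative guest path inside a granted directory.
    /// Unknown directories, absolute paths and ".." escapes yield None.
    pub fn resolve(&self, guest_dir: &str, rel: &str) -> Option<PathBuf> {
        let root = self.dirs.get(guest_dir)?; // no capability, no access
        let mut out = root.clone();
        let mut depth: usize = 0; // how far below the granted root we are

        for comp in Path::new(rel).components() {
            match comp {
                Component::Normal(part) => {
                    out.push(part);
                    depth += 1;
                }
                Component::CurDir => {}
                Component::ParentDir => {
                    if depth == 0 {
                        return None; // would escape the granted directory
                    }
                    out.pop();
                    depth -= 1;
                }
                // Absolute paths were never granted as capabilities.
                Component::RootDir | Component::Prefix(_) => return None,
            }
        }
        Some(out)
    }
}
```

Real runtimes resolve paths against open directory handles and must also deal with symlinks, which this purely lexical check ignores; the point is only that access starts from nothing and is granted per directory.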

Key WASI Capabilities

| Capability | WASI Status |
|---|---|
| Filesystem Access | ✅ Available through directories the host preopens |
| Environment Variables | ✅ Supported (only variables the host passes in) |
| Random Number Generation | ✅ Cryptographically secure random bytes from the host |
| Networking | 🟡 Emerging: sockets and HTTP interfaces are defined in WASI 0.2 (Preview 2), but runtime support varies |
| Threads | 🟡 Experimental proposals (WASI Threads) |
| Crypto APIs | 🟡 Proposal stage |

✅ Stable | 🟡 Emerging

How WASI is Powering the Next Wave of Serverless Innovation

1️⃣ Serverless Runtimes Simplified

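Traditional serverless functions (e.g., on Node.js or Python) carry a full language runtime, OS abstractions and container or microVM overhead. WASI allows for:

Smaller binary sizes (often <5MB)

Much faster cold starts, since instantiating a Wasm module is far cheaper than booting a container and a language runtime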

Predictable resource usage

Language flexibility (Rust, Zig, TinyGo, C/C++)
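Because a WASI function can be just a program that reads input and writes output, a handler needs no web framework or container image. A minimal sketch of a CGI-style function, assuming the platform passes the request body on stdin and reads the response from stdout (handle and serve are hypothetical names, not a platform SDK):

```rust
use std::io::{self, Read, Write};

/// Hypothetical business logic: uppercase the request body.
pub fn handle(body: &str) -> String {
    body.to_uppercase()
}

/// Reads the whole request from input and writes a CGI-style response
/// (headers, blank line, body) to output. In a wasm32-wasip1 build the
/// caller would pass io::stdin() and io::stdout().
pub fn serve<R: Read, W: Write>(mut input: R, mut output: W) -> io::Result<()> {
    let mut body = String::new();
    input.read_to_string(&mut body)?;

    let reply = handle(&body);
    write!(output, "Content-Type: text/plain\n\n{}", reply)?;
    output.flush()
}
```

Keeping serve generic over Read and Write means the same code can be unit-tested natively and then compiled unchanged for WASI, where its memory use is essentially the request plus the response.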

2️⃣ Edge Computing Ready

WASI excels at edge deployments:

Portable binaries mean you can run workloads close to the user.

Extremely low memory footprints ideal for resource-constrained edge nodes.

Platforms such as Fermyon Spin build edge-native serverless offerings on WASI, and Cloudflare Workers also run WebAssembly at the edge, though with only partial WASI support.

3️⃣ Security by Default

Capability-based access: a module can only call the host functions it imports, and only on resources the host grants

No arbitrary system calls allowed

Minimal attack surface compared to full OS containers

No need for privilege escalation

✅ Best Practice: Deploy only precompiled WebAssembly modules that were built, signed and validated in a secure CI pipeline, to reduce supply-chain risk.
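A CI pipeline can also enforce the deny-by-default idea before deployment by rejecting any module whose imports fall outside an approved list. The sketch below assumes the imports have already been extracted from the binary (for example by inspecting it with the wasm-tools CLI); the Import type and the ALLOWED policy are hypothetical, while the listed names are real wasi_snapshot_preview1 functions.

```rust
/// One import of a Wasm module: (module namespace, function name).
#[derive(Debug, Clone, PartialEq)]
pub struct Import {
    pub module: String,
    pub name: String,
}

/// Hypothetical platform policy: the only host functions we allow.
const ALLOWED: &[(&str, &str)] = &[
    ("wasi_snapshot_preview1", "fd_read"),
    ("wasi_snapshot_preview1", "fd_write"),
    ("wasi_snapshot_preview1", "environ_get"),
    ("wasi_snapshot_preview1", "environ_sizes_get"),
    ("wasi_snapshot_preview1", "clock_time_get"),
    ("wasi_snapshot_preview1", "random_get"),
    ("wasi_snapshot_preview1", "proc_exit"),
];

/// Returns every import not on the allowlist (deny by default).
/// An empty result means the module passes the policy check.
pub fn disallowed(imports: &[Import]) -> Vec<Import> {
    imports
        .iter()
        .filter(|imp| {
            !ALLOWED
                .iter()
                .any(|(m, n)| imp.module == *m && imp.name == *n)
        })
        .cloned()
        .collect()
}
```

A runtime will only link the host functions its embedder defines anyway; running the same policy in CI simply makes a violation fail the build instead of failing at instantiation time in production.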

WASI in Action: Building a Serverless WebAssembly Function

Let’s walk through a simplified example:

Use Case: Image Resizing Microservice

Why WebAssembly?

Fast image processing (Rust compiled to Wasm, running at near-native speed)

Lightweight deployment (<10MB total)

Portable across clouds and edge

Tech Stack:

🚀 Language: Rust

🧩 Runtime: Wasmtime

🖼️ Library: image crate for Rust

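```rust
// Assumes image = "0.24" in Cargo.toml; newer releases renamed or replaced some of these types.
use image::imageops::FilterType;
use image::io::Reader as ImageReader;
use image::{ImageError, ImageOutputFormat};
use std::io::Cursor;

/// Decodes an image from raw bytes, resizes it to fit within width x height
/// (aspect ratio is preserved; use resize_exact to force exact dimensions)
/// and re-encodes the result as PNG. Errors are returned instead of panicking.
pub fn resize_image(input_bytes: &[u8], width: u32, height: u32) -> Result<Vec<u8>, ImageError> {
    // Detect the input format (PNG, JPEG, ...) from the leading bytes, then decode.
    let img = ImageReader::new(Cursor::new(input_bytes))
        .with_guessed_format()?
        .decode()?;

    // Lanczos3 gives high-quality downscaling at a higher CPU cost.
    let resized = img.resize(width, height, FilterType::Lanczos3);

    // Encode into an in-memory buffer; Cursor supplies the Seek the encoder requires.
    let mut output = Vec::new();
    resized.write_to(&mut Cursor::new(&mut output), ImageOutputFormat::Png)?;
    Ok(output)
}
```

Compile to WebAssembly with cargo build --target wasm32-wasip1 --release (after rustup target add wasm32-wasip1; older Rust toolchains called this target wasm32-wasi). wasm-pack is aimed at JavaScript hosts rather than WASI.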
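A pub fn inside a WASI command is not something the host can call directly, so one simple way to wire it up is a small main that reads the image from stdin and writes the PNG to stdout. A sketch under that assumption: parse_dims and run_filter are hypothetical helpers, and run_filter takes the transform as a closure so it can be tested without the image crate.

```rust
use std::io::{self, Read, Write};

/// Parses "<width> <height>" from the arguments (program name already skipped).
pub fn parse_dims(args: &[String]) -> Option<(u32, u32)> {
    match args {
        [w, h] => Some((w.parse().ok()?, h.parse().ok()?)),
        _ => None,
    }
}

/// Reads all input bytes, applies transform, and writes the result.
/// Transform errors are surfaced as io::ErrorKind::InvalidData.
pub fn run_filter<R, W, F, E>(mut input: R, mut output: W, transform: F) -> io::Result<()>
where
    R: Read,
    W: Write,
    F: FnOnce(&[u8]) -> Result<Vec<u8>, E>,
    E: std::fmt::Display,
{
    let mut bytes = Vec::new();
    input.read_to_end(&mut bytes)?;
    let result = transform(&bytes)
        .map_err(|e| io::Error::new(io::ErrorKind::InvalidData, e.to_string()))?;
    output.write_all(&result)?;
    output.flush()
}

// Hypothetical wiring in the WASI binary, using resize_image from above:
// fn main() -> std::io::Result<()> {
//     let args: Vec<String> = std::env::args().skip(1).collect();
//     let (w, h) = parse_dims(&args).expect("usage: resize <width> <height>");
//     run_filter(std::io::stdin(), std::io::stdout(), |b| resize_image(b, w, h))
// }
```

With a main like the commented one, the module could be invoked as wasmtime run resize.wasm 200 200 < photo.jpg > thumb.png; Wasmtime inherits stdin and stdout by default, so no filesystem access has to be granted at all.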

Deploy via WASI-compatible serverless providers, e.g.:

Fermyon Spin

wasmCloud

Suborbital Compute

Cloudflare Workers (limited WASI subset)

Description: Flowchart showing CI/CD pipeline compiling Rust → WebAssembly → WASI module → Deployed to edge nodes / serverless provider with runtime orchestration.

Real-World WASI Adoption Examples

1️⃣ Fermyon Spin

Spin enables developers to deploy WASI-powered serverless microservices directly at the edge with sub-millisecond cold starts.

📈 Reported performance (workload-dependent):

Cold start: <1ms

Deployment artifact: ~2MB

2️⃣ Cloudflare Workers (Partial WASI)

Cloudflare Workers run on V8 isolates that can execute WebAssembly modules, so Rust and other languages can be deployed as near-native edge functions; WASI support there is experimental and covers only a subset of the interface.

3️⃣ Fastly Compute@Edge

Fastly's Compute platform (formerly Compute@Edge) is built on Wasmtime, offering sub-millisecond request handling, per-request sandboxing and straightforward deployment pipelines for Wasm modules.

Advanced WASI Tips, Gotchas & Emerging Standards

💡 Performance Tips:

Prefer languages with mature WebAssembly toolchains (Rust, Zig, TinyGo)

Avoid unnecessary heap allocations for low-latency functions

Stream large inputs in fixed-size chunks instead of buffering them whole (WASI 0.2 exposes byte streams through its wasi:io interfaces)
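The streaming tip can be sketched with plain std::io, which on wasm32-wasip1 sits on top of WASI reads. This is an assumed example rather than a WASI-specific API: a single 64 KiB buffer is reused for every read, so memory stays flat no matter how large the input is, and 64-bit FNV-1a hashing is only a stand-in for whatever per-chunk work a real function would do.

```rust
use std::io::{self, Read};

/// Hashes a stream with 64-bit FNV-1a while reading it in fixed-size chunks.
/// Returns (hash, bytes_read). Only one 64 KiB buffer is ever used.
pub fn fnv1a_stream<R: Read>(mut input: R) -> io::Result<(u64, u64)> {
    const FNV_OFFSET: u64 = 0xcbf2_9ce4_8422_2325;
    const FNV_PRIME: u64 = 0x0000_0100_0000_01b3;

    let mut hash = FNV_OFFSET;
    let mut total: u64 = 0;
    let mut buf = [0u8; 64 * 1024]; // reused for every read; no per-chunk allocation

    loop {
        let n = match input.read(&mut buf) {
            Ok(0) => break, // end of stream
            Ok(n) => n,
            Err(e) if e.kind() == io::ErrorKind::Interrupted => continue, // retry the read
            Err(e) => return Err(e),
        };
        for &byte in &buf[..n] {
            hash ^= u64::from(byte);
            hash = hash.wrapping_mul(FNV_PRIME);
        }
        total += n as u64;
    }
    Ok((hash, total))
}
```

Because the hash is updated byte by byte, the result is the same however the input happens to be split into reads, which is exactly the property a streaming handler needs.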

⚠️ Current Limitations:

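Networking is still maturing: sockets and HTTP interfaces arrived with WASI 0.2, but support differs across runtimes and hosting platforms.

Multi-threading and concurrency models remain experimental.

No filesystem access outside the directories the host explicitly preopens.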

📌 Emerging Innovations:

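WASI Preview 2 (WASI 0.2): Richer interfaces, such as sockets and HTTP, defined on top of the Component Model

Component Model: Compose multiple Wasm modules across languages

WASIX: A Wasmer-led, non-standard superset of WASI Preview 1 that adds POSIX-style calls (such as threads and sockets) to ease porting of existing code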

✅ Best Practice: Track proposal phases through the WASI Subgroup of the W3C WebAssembly Community Group to stay aligned with spec maturity.

Conclusion: Why WASI Belongs On Your Serverless Roadmap

As serverless computing continues to evolve, WASI offers a compelling pathway for cloud architects, backend engineers and serverless developers:

✅ Faster cold starts

✅ Smaller deployable artifacts

✅ Stronger security models

✅ True cloud portability

✅ Better developer experience across languages


Enjoyed this article?

Subscribe to our newsletter and never miss out on new articles and updates.

More from Dev Orbit

10 JavaScript Quirks That Look Wrong (But Are Actually Right)

This article dives deep into ten surprising quirks of JavaScript that might confuse developers, especially those new to the language. From unexpected behavior with type coercion to peculiarities in operator precedence, we will clarify each aspect with real-world examples and practical implications. By understanding these quirks, developers can write cleaner and more efficient code, avoiding common pitfalls along the way.

Top AI Tools to Skyrocket Your Team’s Productivity in 2025

As we embrace a new era of technology, the reliance on Artificial Intelligence (AI) is becoming paramount for teams aiming for high productivity. This blog will dive into the top-tier AI tools anticipated for 2025, empowering your team to automate mundane tasks, streamline workflows, and unleash their creativity. Read on to discover how these innovations can revolutionize your workplace and maximize efficiency.

Mastering Git Hooks for Automated Code Quality Checks and CI/CD Efficiency

Automate code quality and streamline your CI/CD pipelines with Git hooks. This step-by-step tutorial shows full-stack developers, DevOps engineers, and team leads how to implement automated checks at the source — before bad code ever hits your repositories.

Event-Driven Architecture in Node.js

Event Driven Architecture (EDA) has emerged as a powerful paradigm for building scalable, responsive, and loosely coupled systems. In Node.js, EDA plays a pivotal role, leveraging its asynchronous nature and event-driven capabilities to create efficient and robust applications. Let’s delve into the intricacies of Event-Driven Architecture in Node.js exploring its core concepts, benefits, and practical examples.

Are AIs Becoming the New Clickbait?

In a world where online attention is gold, the battle for clicks has transformed dramatically. As artificial intelligence continues to evolve, questions arise about its influence on content creation and management. Are AIs just the modern-day clickbait artists, crafting headlines that lure us in without delivering genuine value? In this article, we delve into the fascinating relationship between AI and clickbait, exploring how advanced technologies like GPT-5 shape engagement strategies, redefine digital marketing, and what it means for consumers and content creators alike.

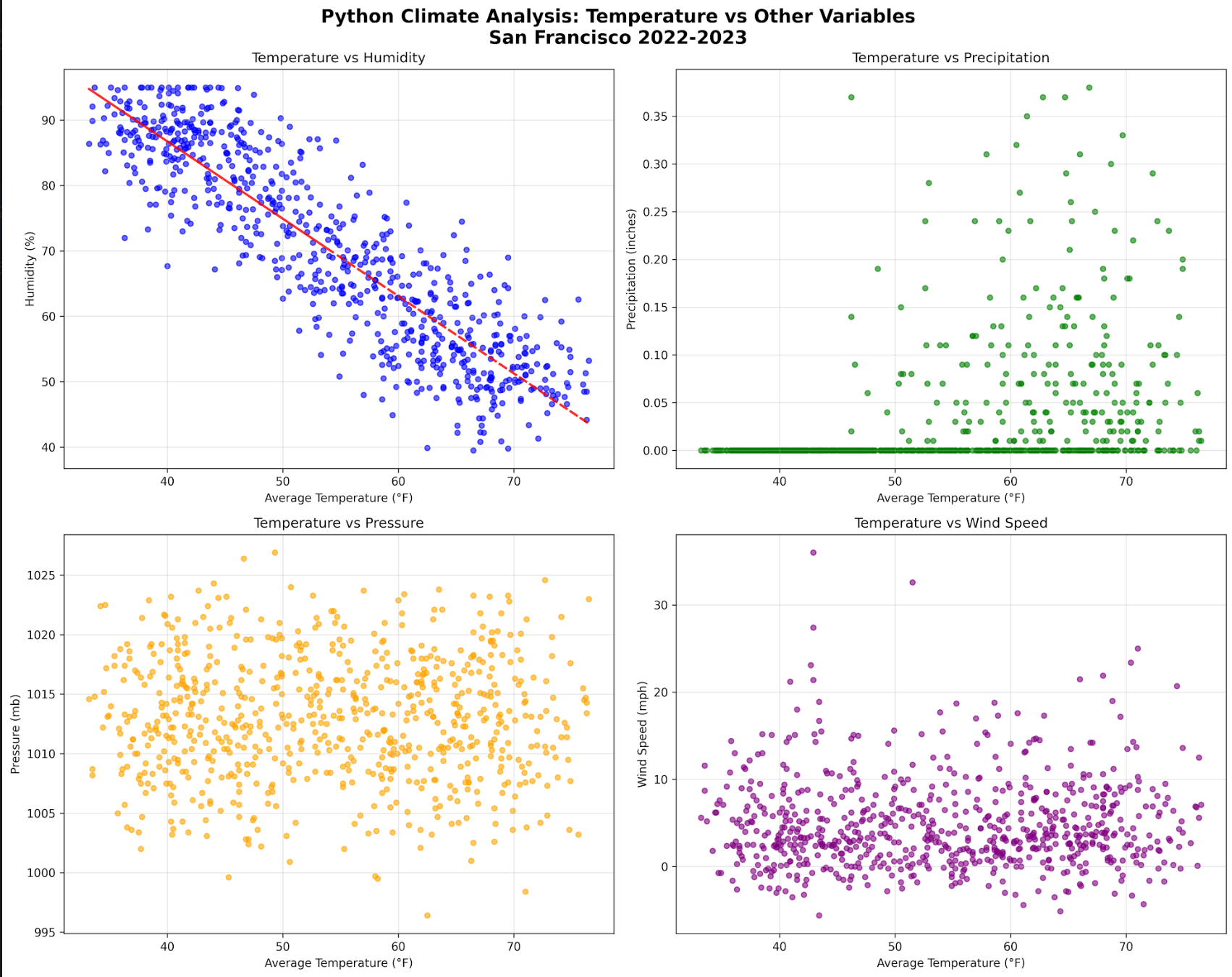

Python vs R vs SQL: Choosing Your Climate Data Stack

Delve into the intricacies of data analysis within climate science by exploring the comparative strengths of Python, R and SQL. This article will guide you through selecting the right tools for your climate data needs, ensuring efficient handling of complex datasets.

Releted Blogs

Best Cloud Hosting for Python Developers in 2025 (AWS vs GCP vs DigitalOcean)

Finding the Right Python Cloud Hosting in 2025 — Without the Headaches Choosing cloud hosting as a Python developer in 2025 is no longer just about uptime or bandwidth. It’s about developer experience, cost efficiency and scaling with minimal friction. In this guide, we’ll break down the top options — AWS, GCP and DigitalOcean — and help you make an informed choice for your projects.

A Beginner’s Guide to AWS EC2 and AWS Lambda: When and Why to Use Them

Confused between EC2 and Lambda? This beginner-friendly guide breaks down their core differences, use cases, pros and cons and helps you choose the right service for your application needs.

How to Build an App Like SpicyChat AI: A Complete Video Chat Platform Guide

Are you intrigued by the concept of creating your own video chat platform like SpicyChat AI? In this comprehensive guide, we will walk you through the essentials of building a robust app that not only facilitates seamless video communication but also leverages cutting-edge technology such as artificial intelligence. By the end of this post, you'll have a clear roadmap to make your video chat application a reality, incorporating intriguing features that enhance user experience.

Spotify Wrapped Is Everything Wrong With The Music Industry

Every year, millions of Spotify users eagerly anticipate their Spotify Wrapped, revealing their most-listened-to songs, artists and genres. While this personalized year-in-review feature garners excitement, it also highlights critical flaws in the contemporary music industry. In this article, we explore how Spotify Wrapped serves as a microcosm of larger issues affecting artists, listeners and the industry's overall ecosystem.

Have a story to tell?

Join our community of writers and share your insights with the world.

Start Writing